I. Team Formation and Application Area

Members & Roles

Reno Bowen (User Testing Lead): Takes primary responsibility to design user studies, recruit participants, and conduct evaluations.

Nicholas Hippenmeyer (Development Lead): Writes and manages code, oversees GitHub repository, ensures good software engineering practice.

Ilias Karim (UI/UX Design Lead): Keeps team focused on design process, pushes creative boundaries, leads design of UI and associated assets.

Brad Lawson (Documentation Lead): Manages the team website and process documentation, takes and shares meeting notes, assists with evaluation.

Keegan Poppen (Audio Engineering Lead): Responsible for audio engineering.

Problem Area & User Needs

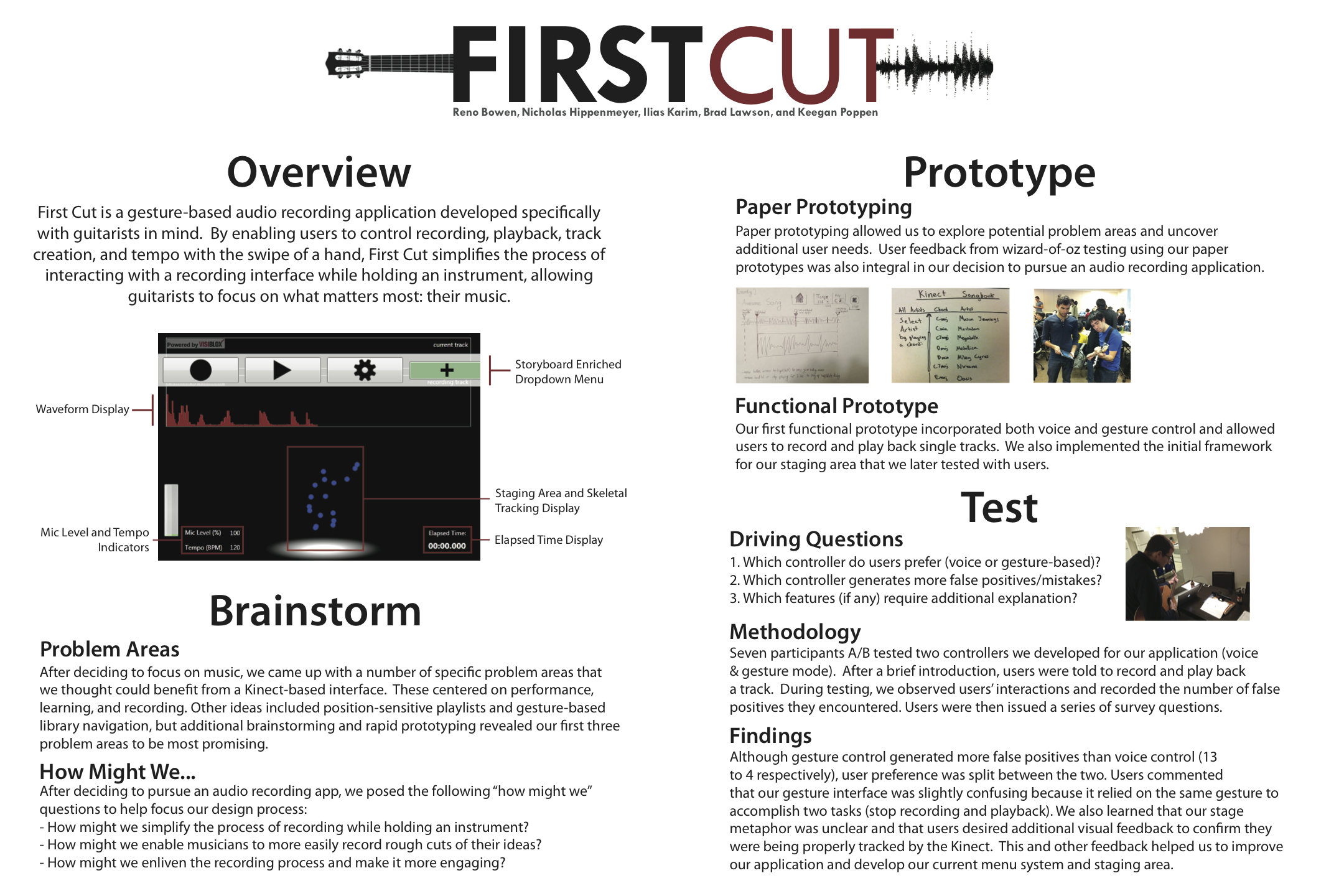

Amateur musicians have benefited greatly from new, cheap recording software and technology. Almost anyone can learn how to use GarageBand or other similar software. However, controlling the software while remaining engaged in playing the instrument can be difficult and frustrating. How might we make the experience of recording and practicing music at home more seamless? How might we redesign on-the-fly performances?

A Kinect-based interface would allow musicians to more easily record, play back, and loop their audio recordings or practice tracks. Leveraging audio and gestural feedback, musicians would no longer be forced to depart from their instrument to coordinate the recording process by using a mouse to manipulate sliders and knobs.

II. Initial Prototyping

Feasibility Study

Gesture Feasibility

A variety of gestures capable of being performed while playing guitar or drums were demonstrated and evaluated for feasibility. Given the lack of an assembled drumset, a worst-case scenario was evaluated with the majority of the lower torso occluded.

Guitars

With guitars, the right hand can easily be taken advantage of for gestures given its visibility and freedom. In addition, tilting of the neck of the guitar allows the execution of gestures seamlessly mid-play. Foot and leg lifts and extensions can be used as a clutch.

Gestures which might involve lifting the guitar too high can block too many joints, leading to inaccurate and unreliable measurements. If the left hand is to be used for gesturing, it is best used above the neck of the guitar to ensure visibility.

Drums

Drums pose a particular challenge to the Kinect sensor, given that many drumsets will largely, if not completely, occlude the lower half of the performer’s body. In addition, playing drums involves movement of the entire body, ruling out mid-performance gestures almost entirely.

Fortunately, between-play configuration of the software can be accomplished by using hand-over-the-head as a clutch. Unless someone was a particularly wild drummer, this could serve as a suitable indication that the remaining free hand is to be recorded for 2D/3D gesture control.
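As a rough illustration of the hand-over-the-head clutch, the following Python sketch assumes that skeletal joints arrive as (x, y) pairs with y increasing upward. The `Joint` type, the `margin` value, the function name, and the rule that exactly one raised hand engages the clutch are all hypothetical and are not taken from the Kinect SDK or from our prototype.

```python
from typing import NamedTuple, Optional


class Joint(NamedTuple):
    """Hypothetical 2D joint position; y is assumed to increase upward."""
    x: float
    y: float


def free_hand_if_clutched(head: Joint, left: Joint, right: Joint,
                          margin: float = 0.10) -> Optional[Joint]:
    """Return the hand to track for gesture control, or None if no clutch.

    The clutch is engaged when exactly one hand is raised at least `margin`
    above the head; the other hand is then treated as the gesturing hand.
    """
    left_up = left.y > head.y + margin
    right_up = right.y > head.y + margin
    if left_up and not right_up:
        return right
    if right_up and not left_up:
        return left
    return None  # neither or both hands raised: ignore
```

Requiring a margin above the head, rather than simply "higher than the head", is one way to keep ordinary cymbal hits from engaging the clutch, although the right value would need to be found by testing with real drummers.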

A final thought is that the drummer could potentially be recorded from behind. Unfortunately, the issue still stands that they are making use of all four limbs during play. Even so, given a suitably neat drum configuration, this opens up the possibility that the feet could be used to signal gestures once a clutch has been enabled. However, given the variable amount of space different drummers have behind their drumset, this would almost certainly be an unreliable option. It’s also worth noting that drumsets are very often set up in rooms against a corner or wall (they’re rather space-consuming), making the positioning of a Kinect sensor behind a user impractical.

Analysis of Use Cases

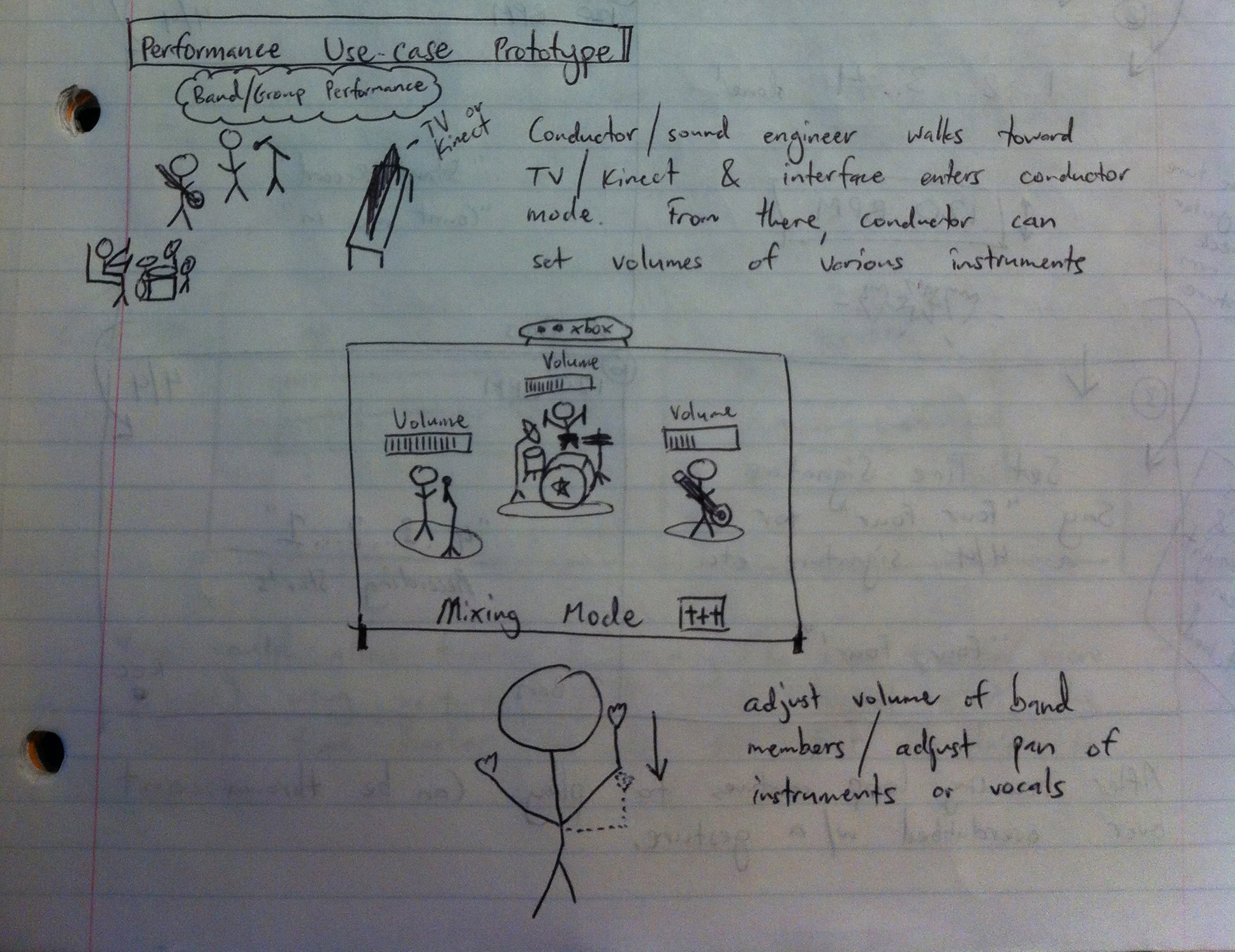

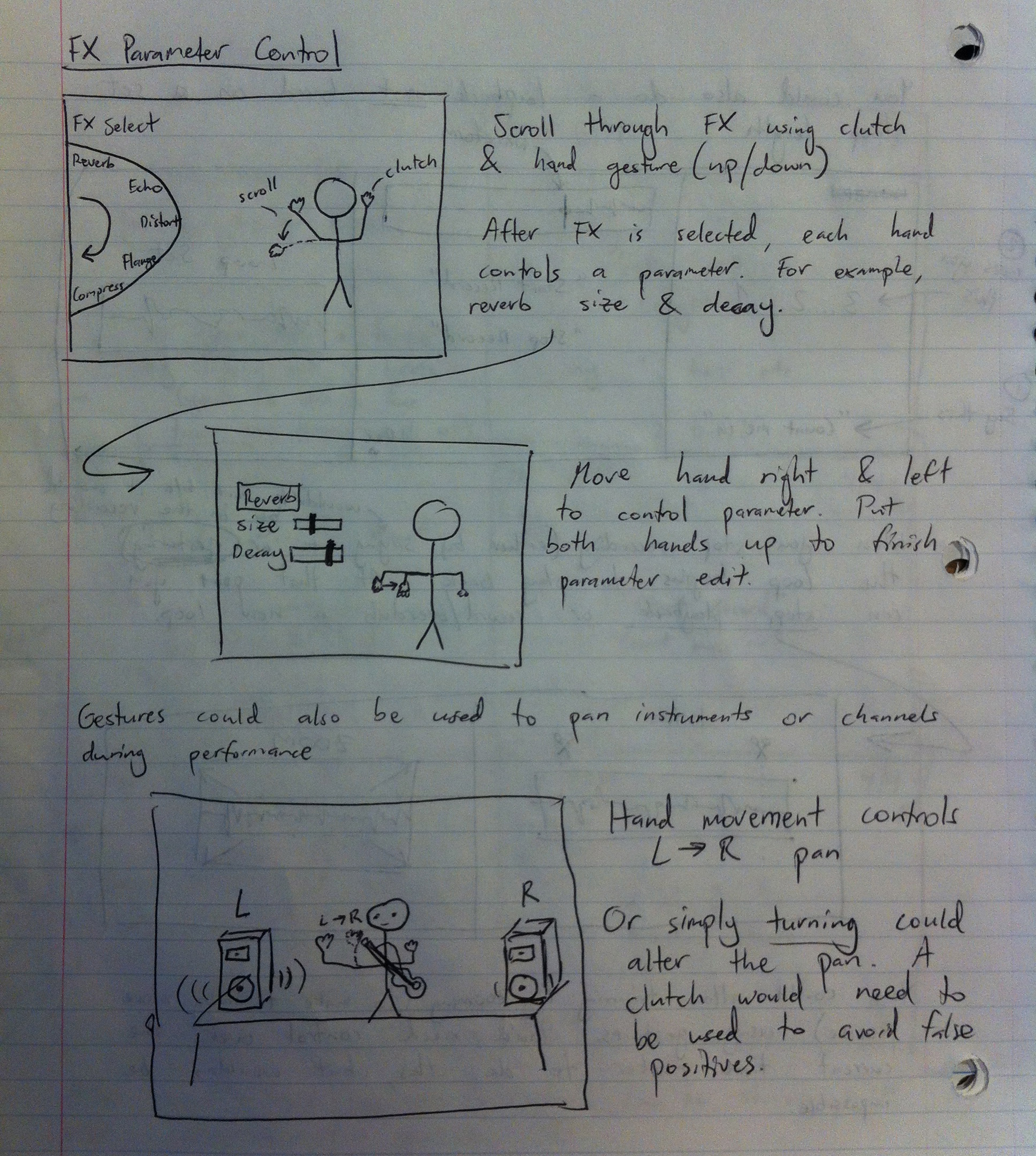

1. Performance

Prototype Sketches

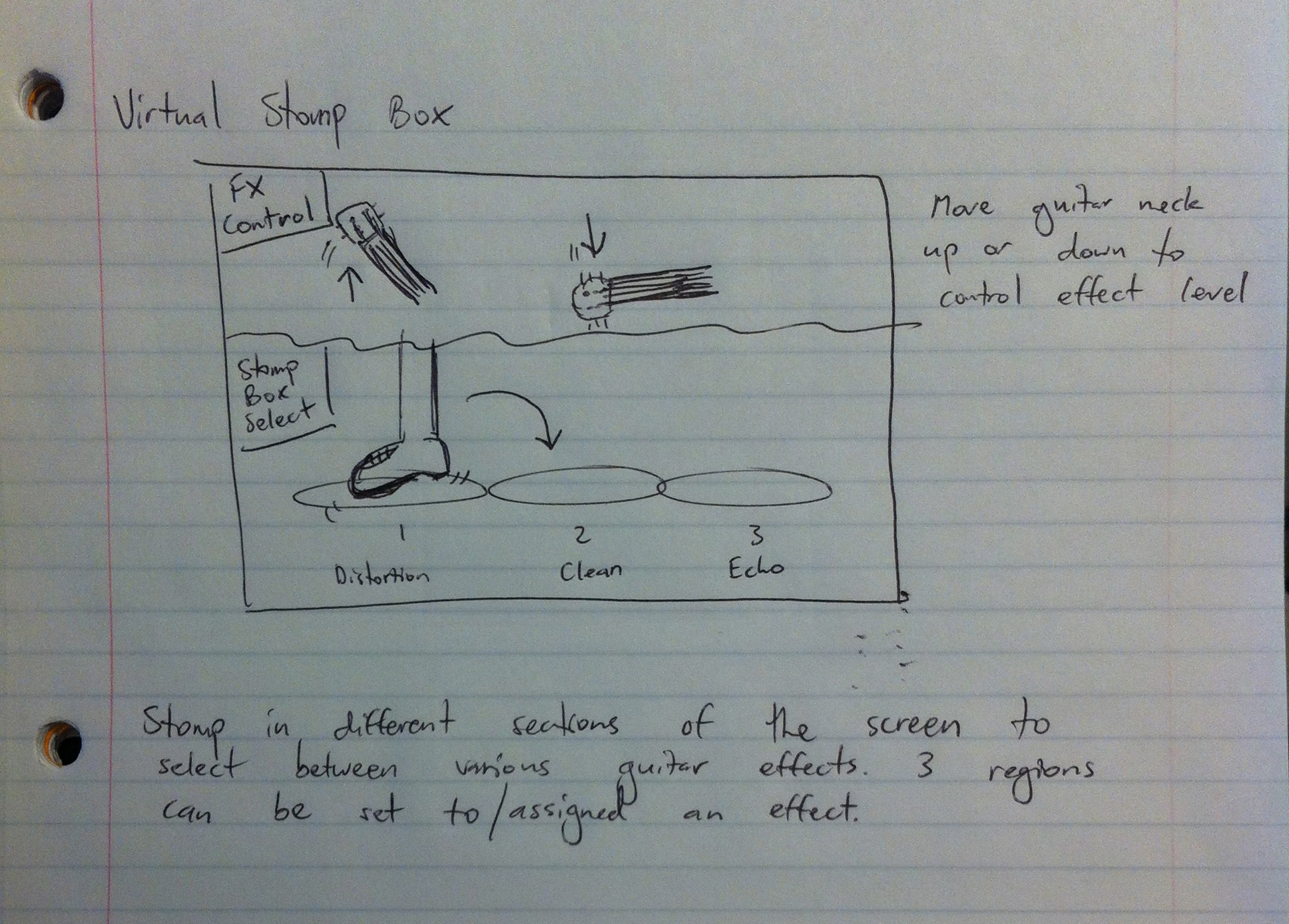

Virtual Stomp Box Prototype

With music as our high-level application area, a natural use-case to pursue was gesture-based performance interfaces. After a few initial brainstorming sessions with the entire group, I delved deeper into musical performance as a specific application area and came up with a number of realistic scenarios in which gestural interfaces would either simplify or improve upon existing methods for controlling volume, FX parameters, and even loopback performance. One idea that I particularly liked--because of its ability to combine a number of promising concepts developed in our initial brainstorms--was a virtual stomp-box. Using the Kinect for skeletal tracking, I thought it would be both feasible and compelling to create a virtual stomp-box for guitar players that uses the stomping of their foot to select between FX regions and the neck of their guitar to adjust the corresponding effect level. Three presets (or possibly more) could be stored to zones at the bottom of the screen (by dragging them from a scrolling list on the left side of the screen) and then after they were assigned, a guitar player could select them by stepping on the respective region. Then while playing, a guitar player could tilt the neck of the guitar in order to control the effect level. This control mechanism would likely result in some false positives--a problem that would need to be addressed in future iterations of the prototype, perhaps by introducing a clutch gesture such as a foot movement--but I thought a key need the interface should address was the problem of allowing guitar players to control effects without taking their hands off of their instrument.

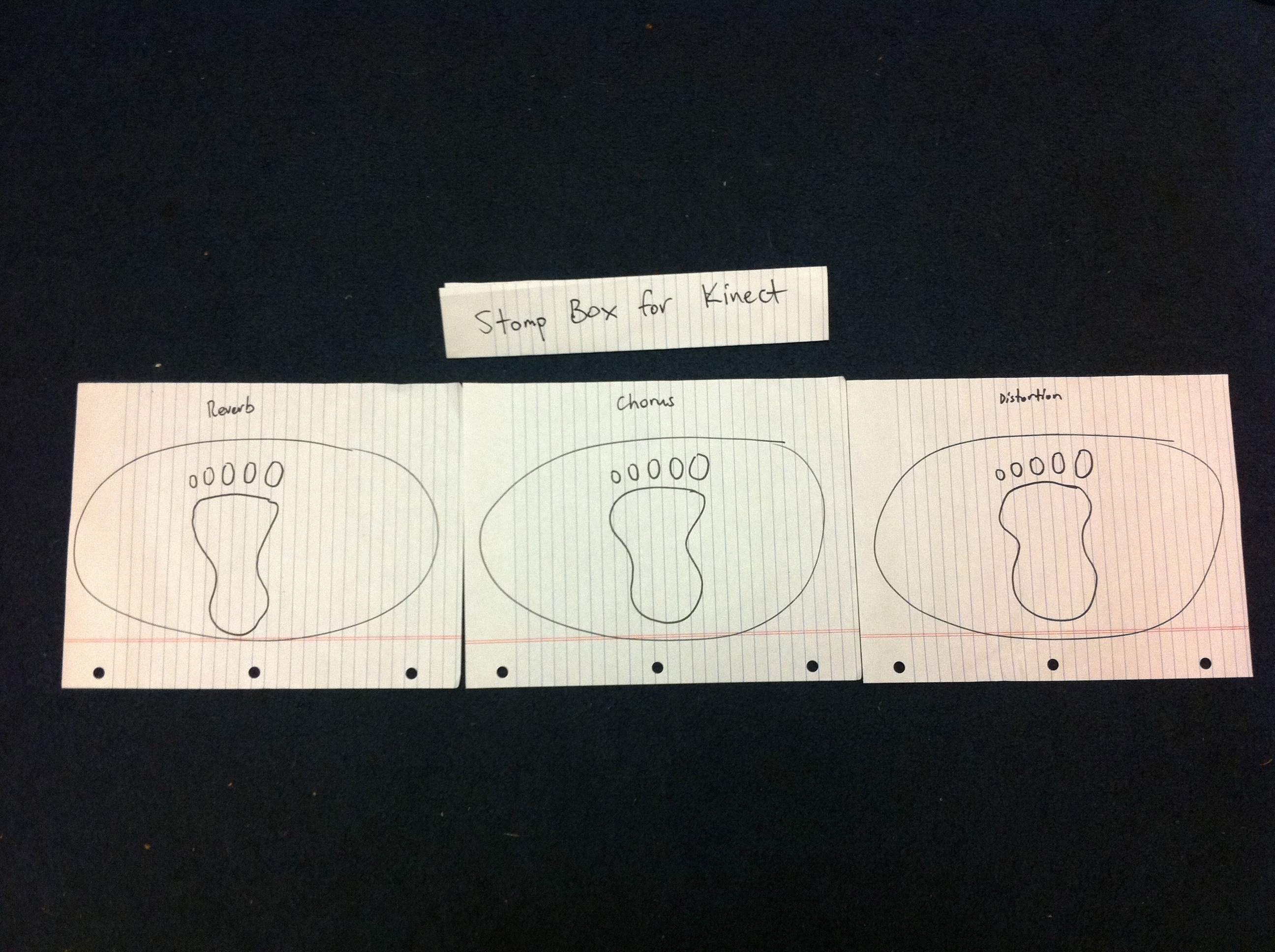

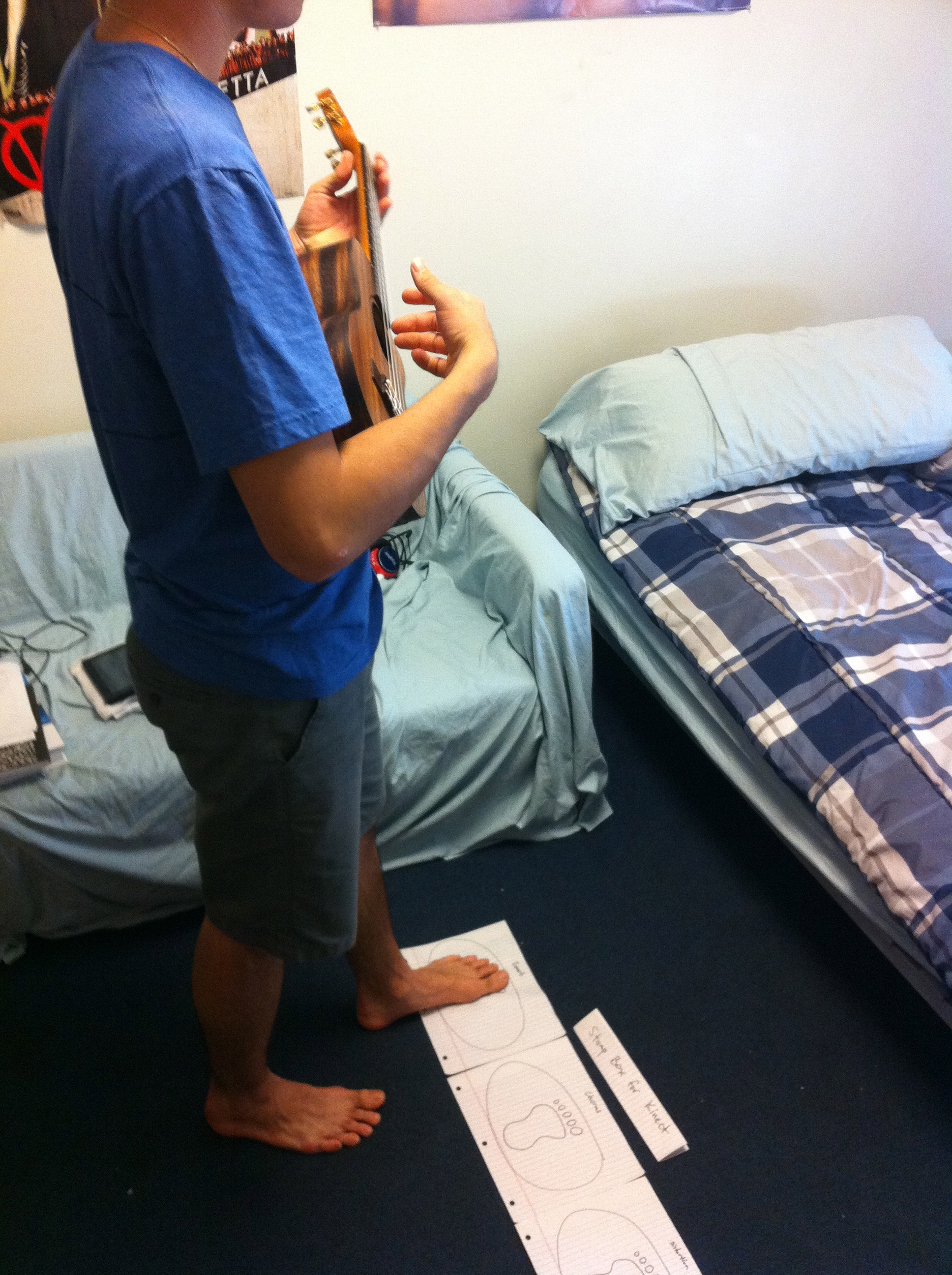

Interview Notes

I presented the idea and general concept of a virtual stomp box to Scooter (a friend who plays ukulele) and set up a rough paper prototype of what I imagined the user interface to be. For lack of a Kinect, my simple prototype consisted of three stomp box regions with corresponding effects. I also told him about the capability of being able to assign different effects to these regions from a list of presets. Here are some of his reactions and comments on the interface and idea:

- "I can see this working if you're standing. But would it work sitting down?"

- "Do stomp boxes usually have 3 pedals or more? I feel like they're a lot more complicated than this, with plenty more effects."

- "Could you use both legs to control the effects? Or would it just be one leg?"

- "Perhaps adding a drum beat or backing track would be cool if you're trying to perform alone."

- "It might be weird to not see pedals or the effects regions on stage."

- "Perhaps you could move the effects regions to surround the body (kind of like Dance Dance Revolution) as opposed to having them all in front of you."

Although I couldn't write down all of the things he said, Scooter also talked quite a bit about the lack of visual or mechanical feedback and how it might be confusing or difficult to control effects on stage without being able to see them. I agreed, but then realized that the virtual stomp box could follow you wherever you went. Instead of being stationary and located at one point on stage, the clutch for the stomp box could be moving your foot forward, and then you could stomp down either to the left, middle, or right of your body in order to select from each of the three effects. That way, no matter where you were on stage, you could change effects by simply moving your foot forward and stepping down. Cool!
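The body-relative stomp box described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the player faces the sensor, `z` is the distance from the sensor in meters, `x` grows to the player's right, and the `forward_min` and `zone_width` thresholds are invented values that would need tuning.

```python
from typing import Optional


def stomp_zone(hip_x: float, hip_z: float, foot_x: float, foot_z: float,
               forward_min: float = 0.25,
               zone_width: float = 0.20) -> Optional[str]:
    """Classify a stomp as 'left', 'middle', or 'right' relative to the body.

    Returns None when the foot has not moved far enough forward of the hips
    to engage the clutch.
    """
    if hip_z - foot_z < forward_min:
        return None  # clutch not engaged
    offset = foot_x - hip_x
    if offset < -zone_width / 2:
        return "left"
    if offset > zone_width / 2:
        return "right"
    return "middle"
```

Because every comparison is made against the hip position rather than a fixed point on the floor, the same stomp selects the same effect wherever the player stands on stage.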

Scooter also agreed that using the guitar neck to control effects parameters/levels would lead to some miscues and hiccups, but liked the idea of being able to control things seamlessly with a guitar instead of having complex knobs, etc.

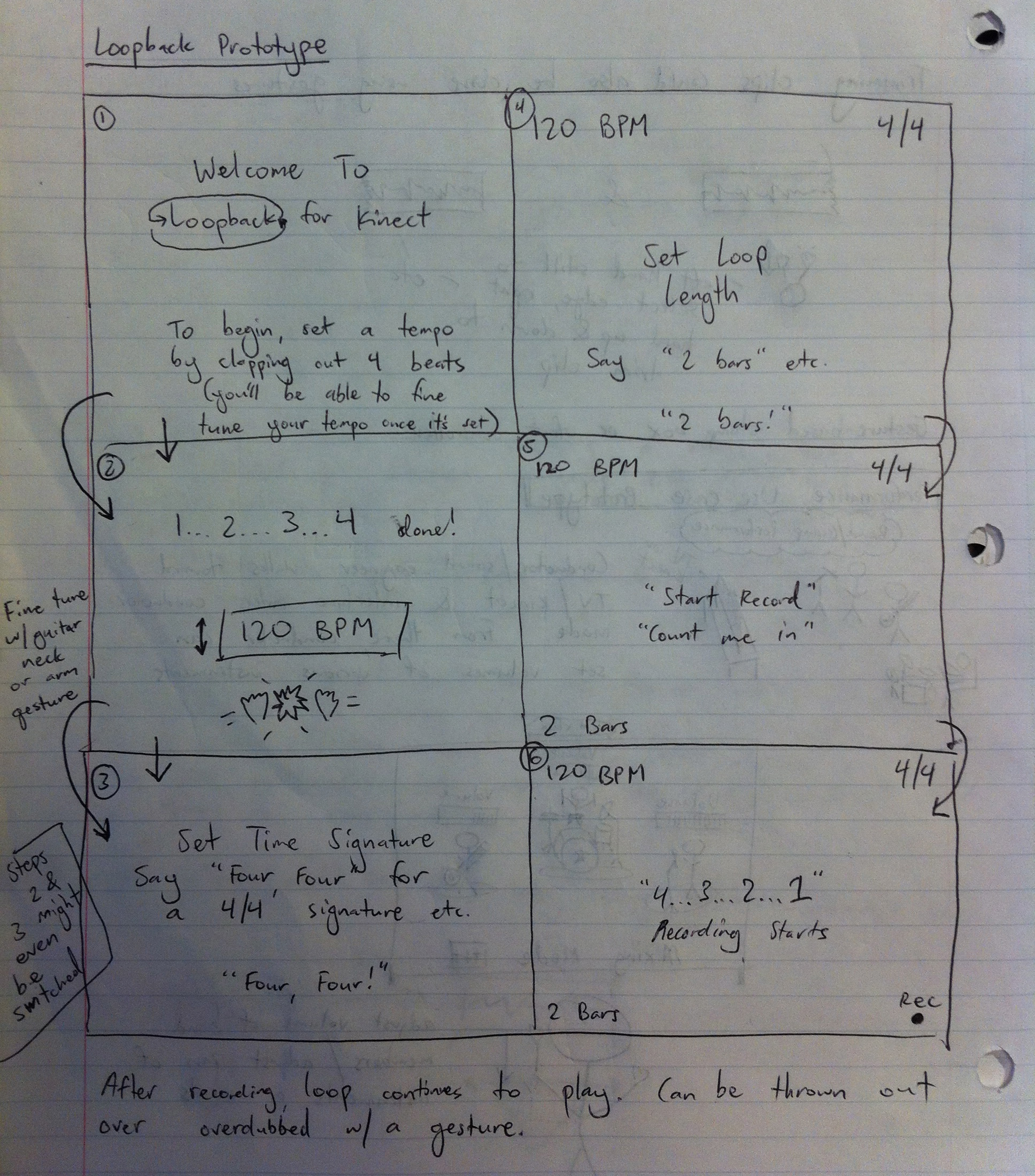

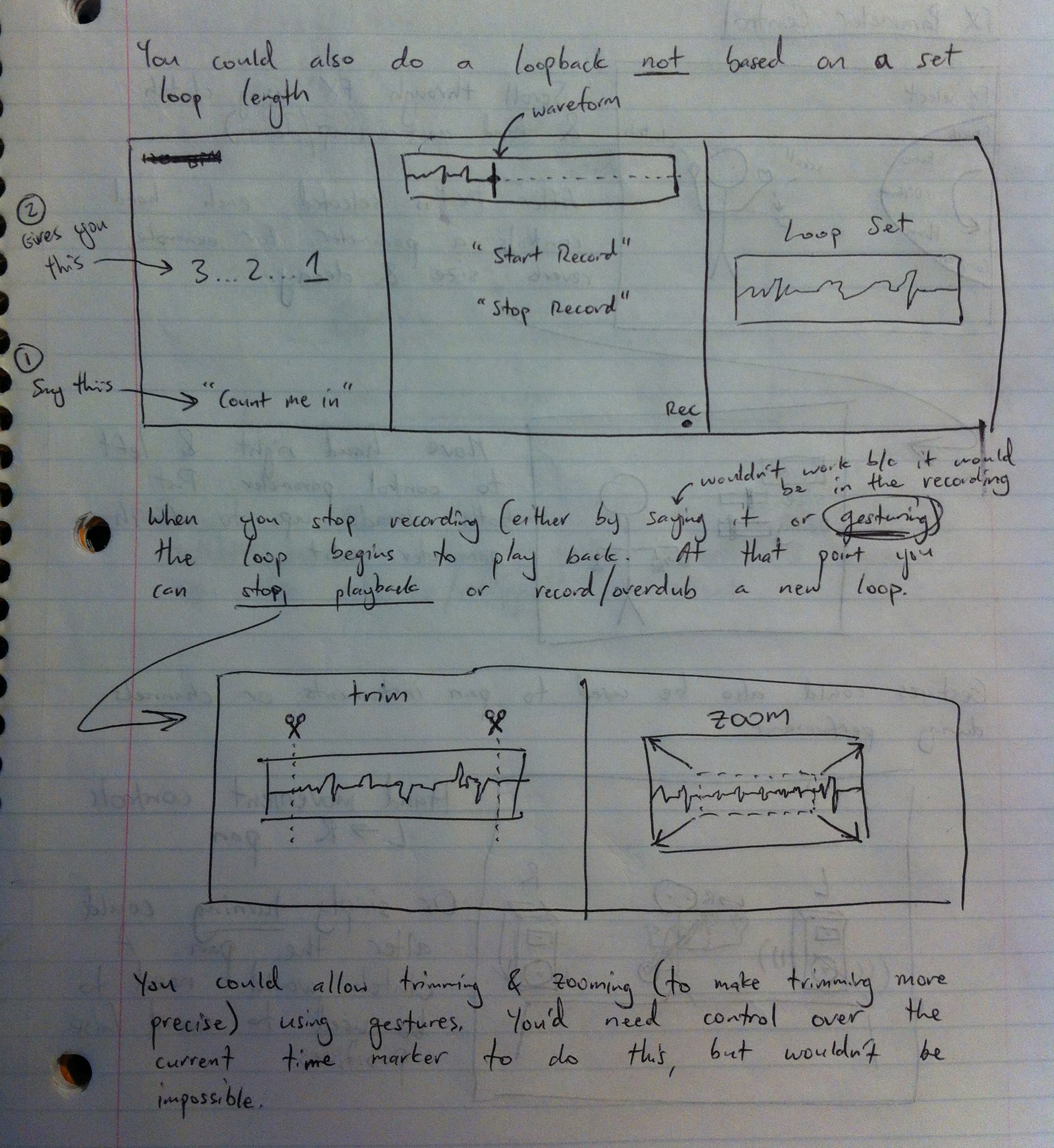

2. Recording

The following prototype explores a way of recording audio while requiring minimal input from the musician.

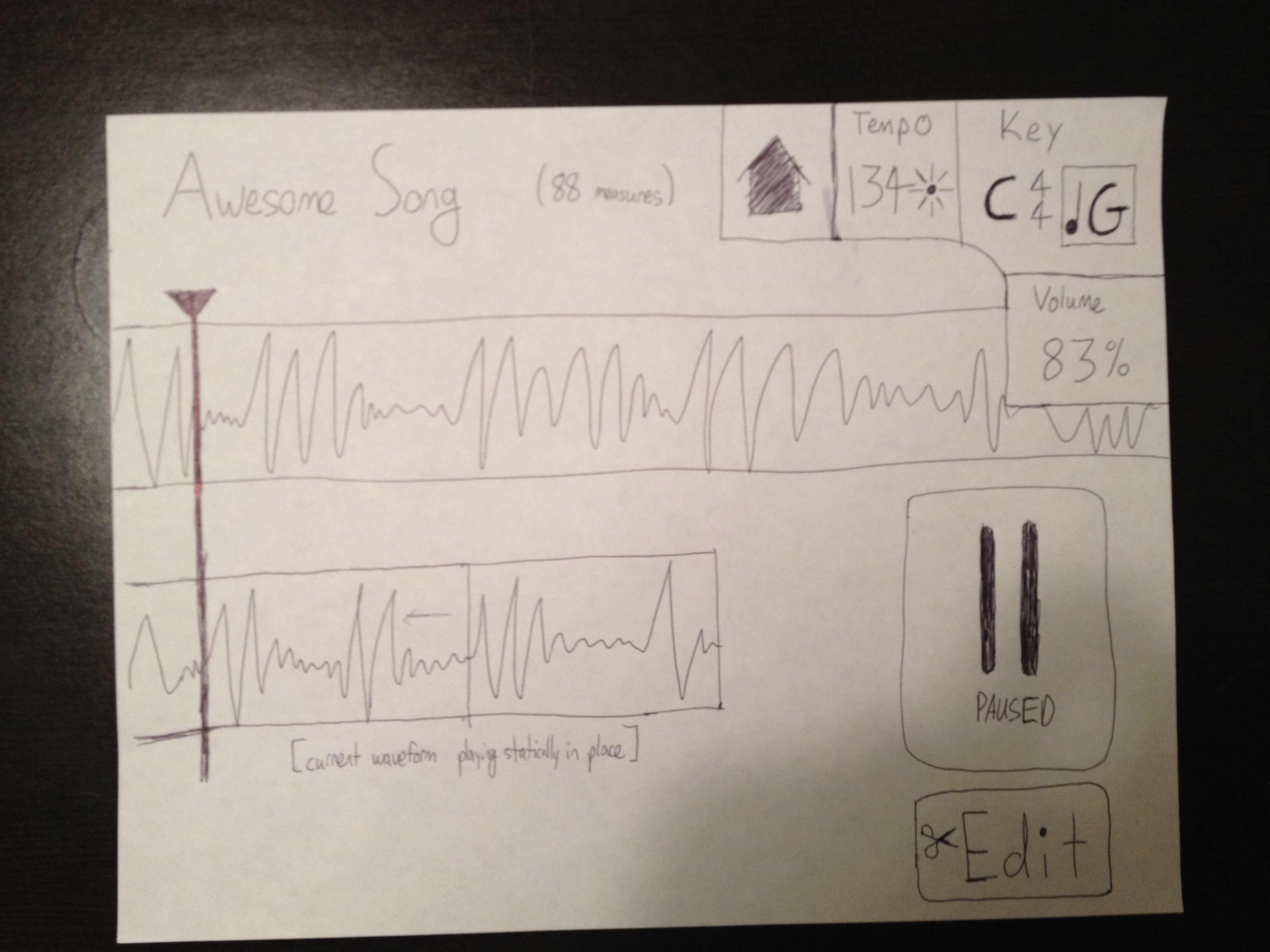

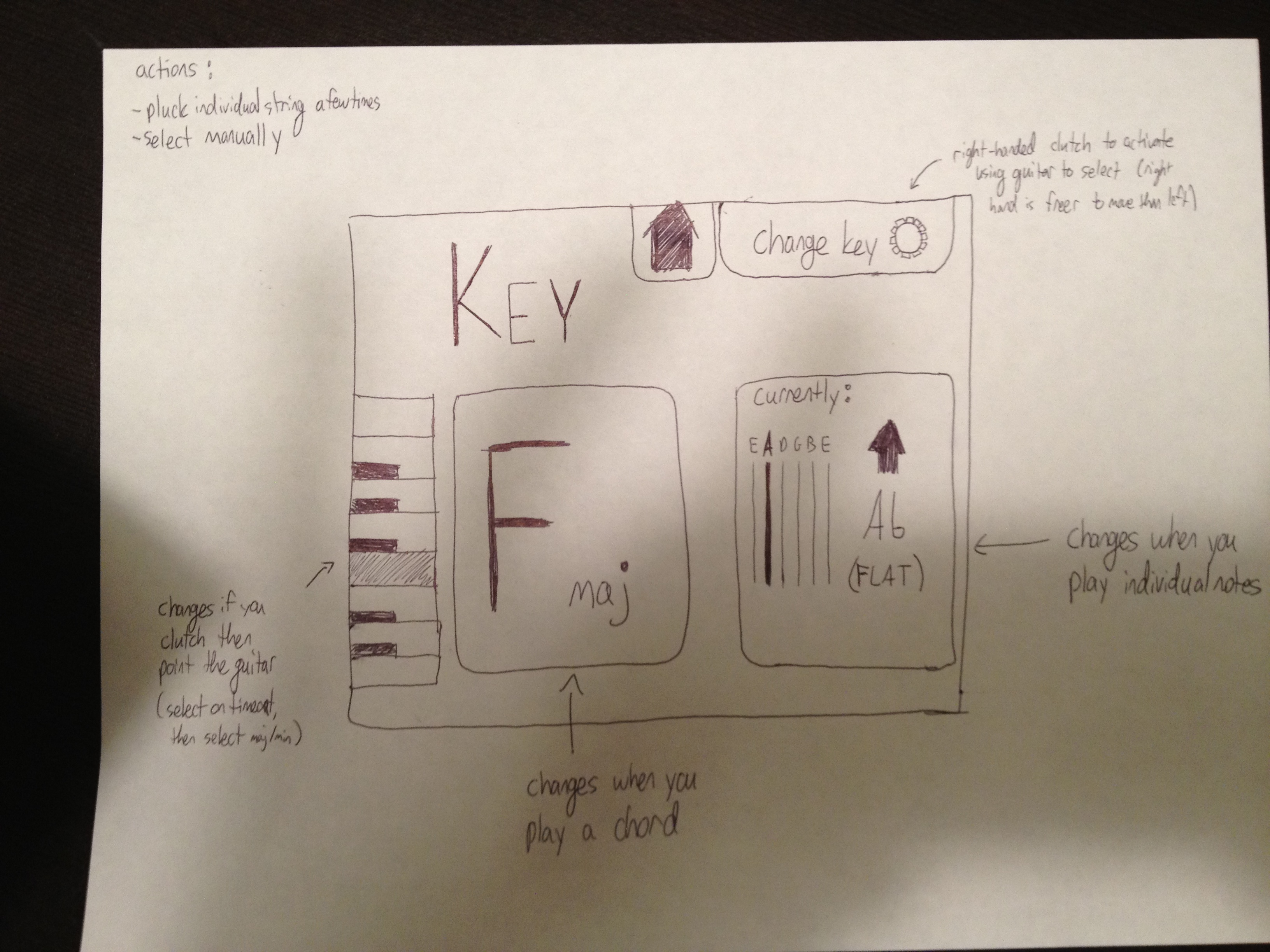

Prototype Sketches and Description

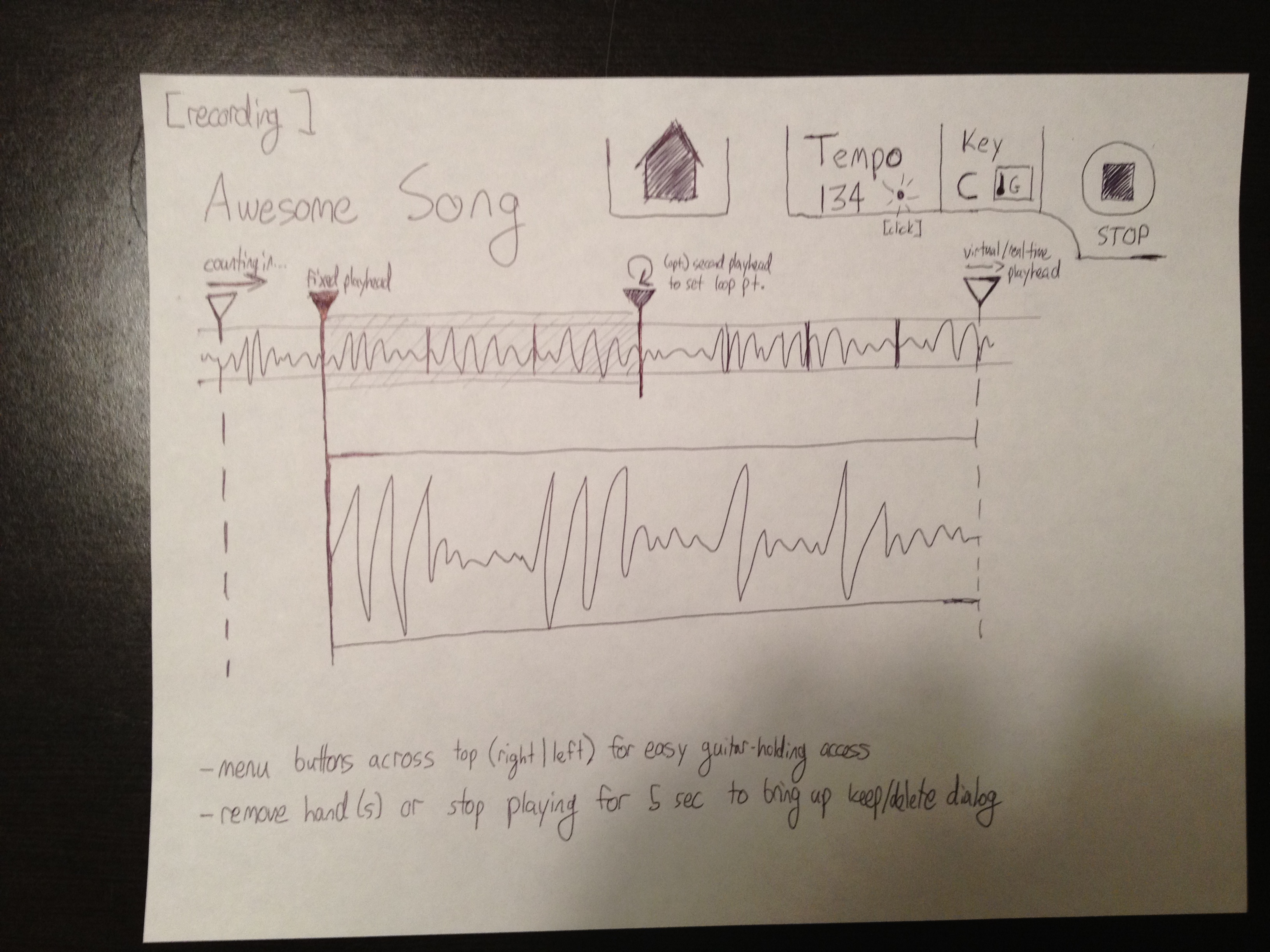

The image below displays the way the app works/looks while you're not recording. The current waveform from whatever is being recorded is displayed at whatever playhead is already set for the track, but passes right through it because nothing is being recorded. You can start recording by hovering over the pause area on the right side, which changes state to record. Note that all of the mode change / settings buttons are across the top right-- the easiest place to reach as a right-handed guitarist-- and could easily be switched to the left side for southpaws. One immediate observation made by the user upon seeing this screen was "what is the arrow?" (answer: the "home" button, which is a manual backdoor to conductor mode-- a bit unclear).

Once you start recording, the display changes to demonstrate that recording is happening-- the waveform for what is being recorded starts anchored at the left playhead and extends to the right in real time, counting the user in before starting recording.

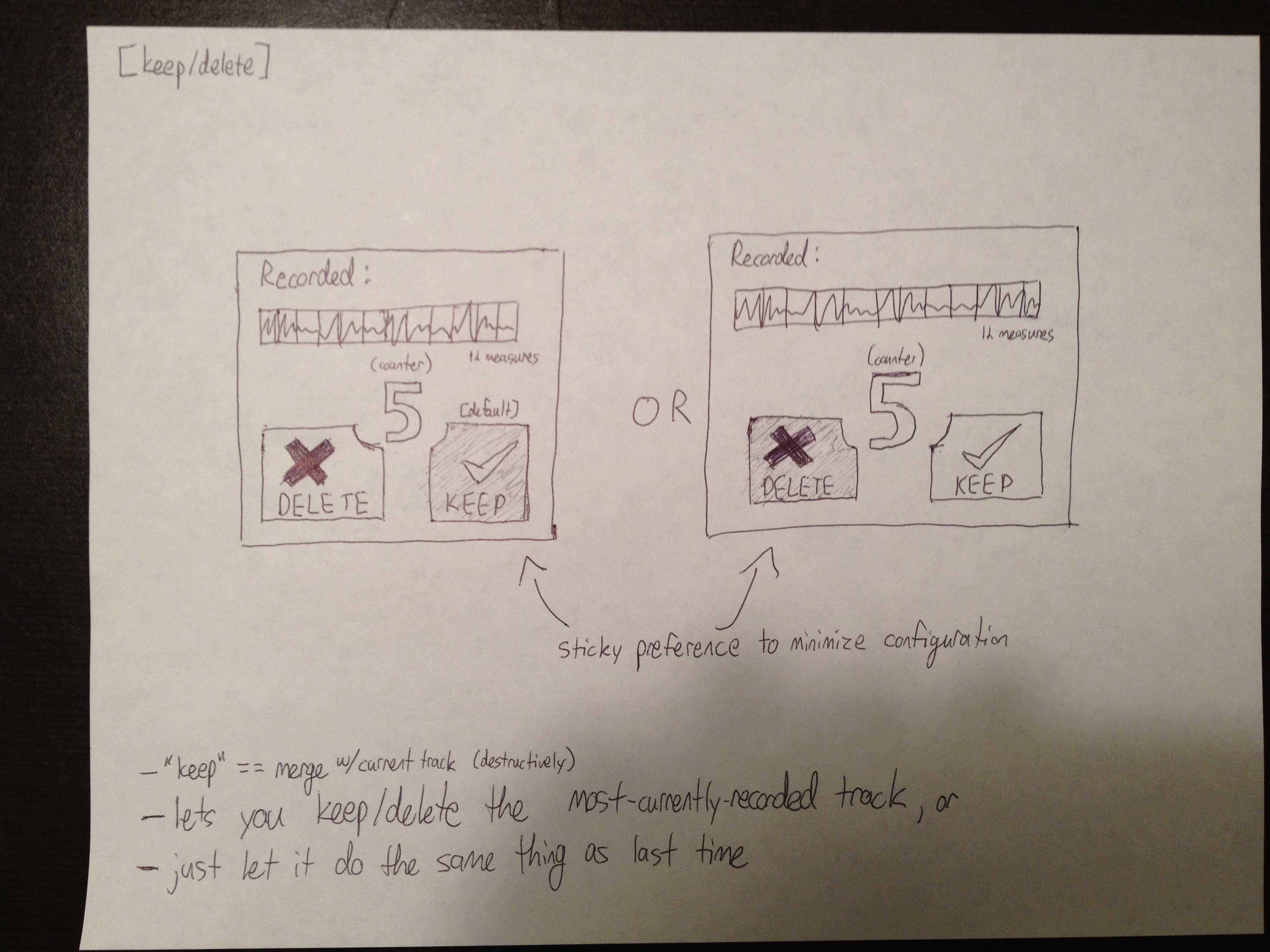

Once you stop recording it automatically asks you if you want to keep what you just played or not, remembering your preference from last time. This dialog times out after a few seconds, so that if the user just wants to sit and wait to start recording again, he or she can without having to lift a finger.

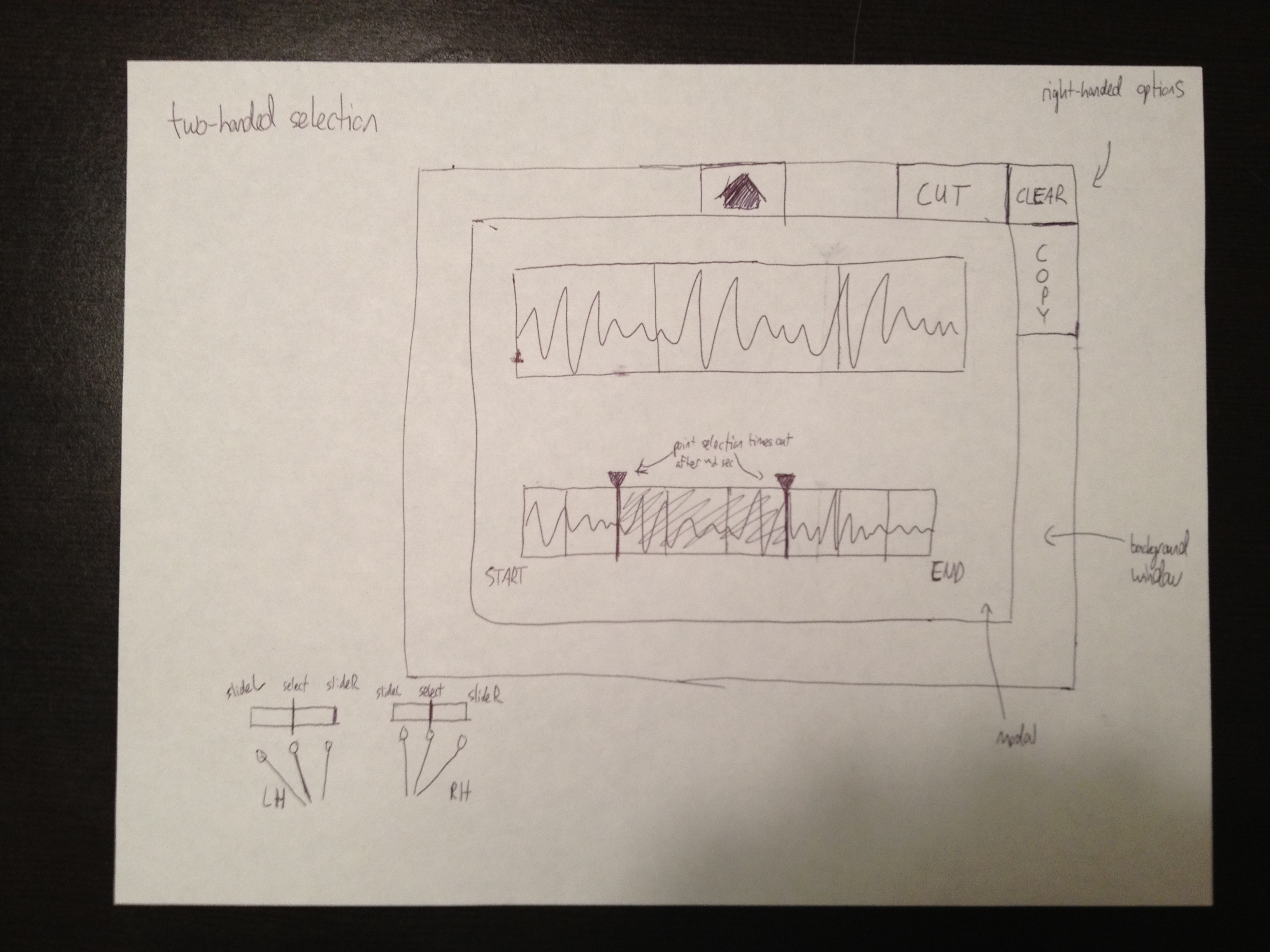

The "change playhead" modal selector comes up when the user puts both hands up, allowing him or her to use either one hand to set the playhead or two to select a range (to either loop over it while recording or to copy / paste / delete it).

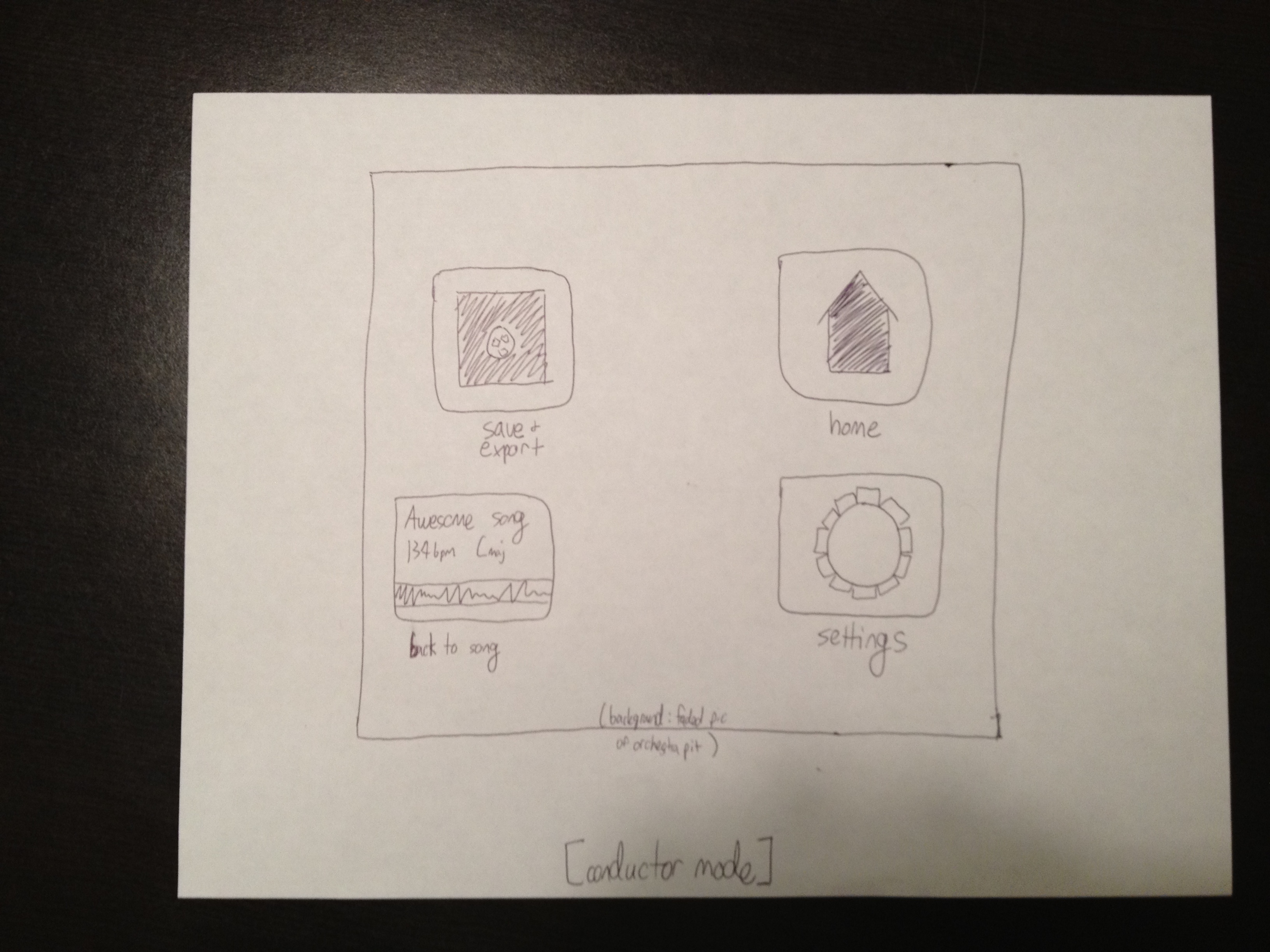

"Conductor Mode" was a first shake at having some sort of main menu that comes up when you hover over the home button at the top of the screen.

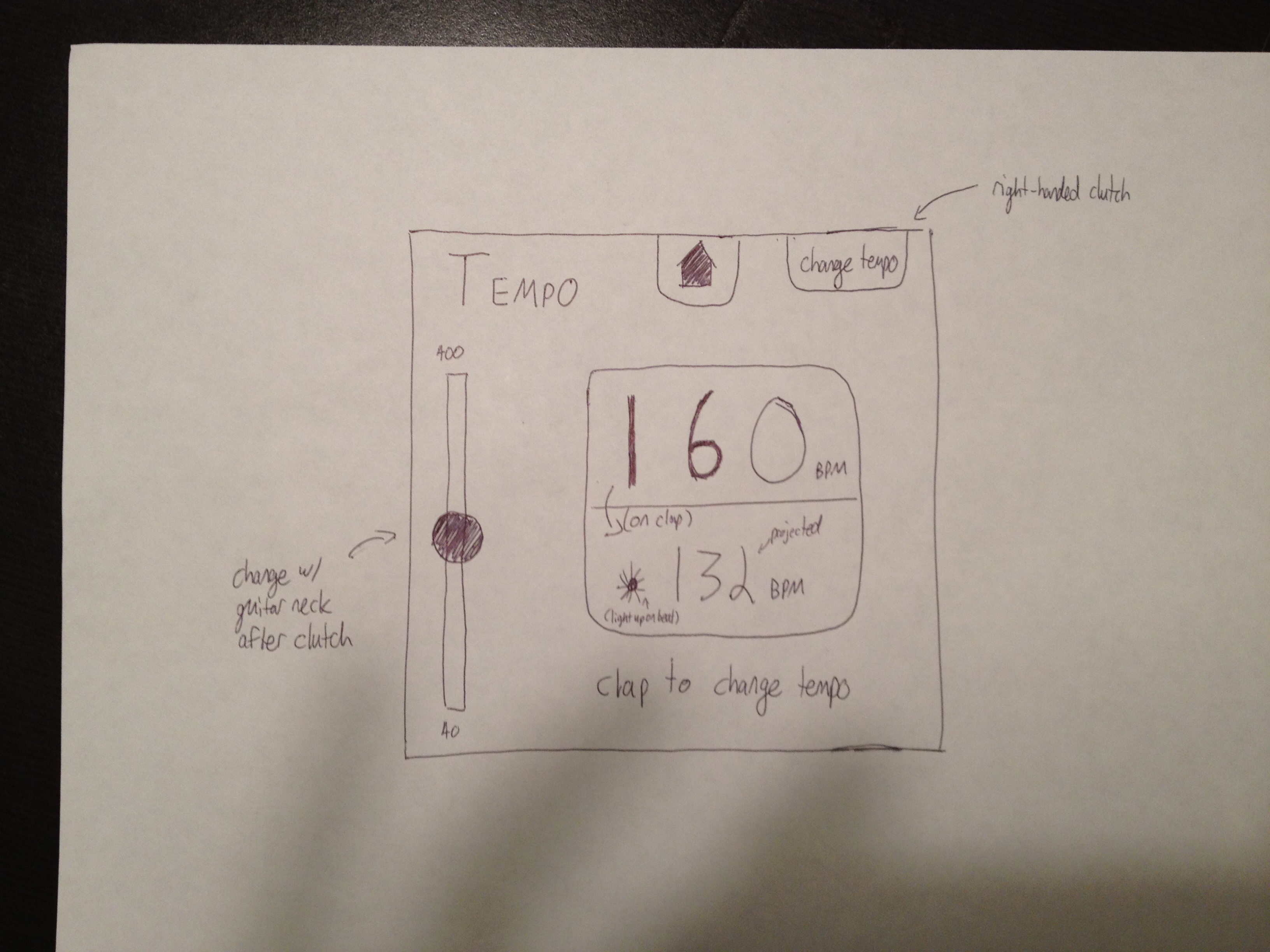

Different modal dialogs let the user change the tempo and key of the recording by a variety of input methods (guitar neck + clutch, clapping, etc.). The key dialog also features a visual guitar tuner that displays the current note, and whether it is sharp or flat.

User Testing Photos

3. Practice

Overview

Having a songbook that could be flipped through with one arm or a simple clutched gesture would be advantageous compared to bending over a small computer and using a mouse and keyboard while trying to hold your instrument, or after stepping away from it altogether. A brief “Wizard of Oz” run-through of the paper prototype supported this hypothesis. Our test user was enthusiastic and liked that he never had to move his guitar out of the way, for example to lean over a keyboard. He found the gestures easy to learn and use, and not too strenuous.

The best way to encourage learning notes, chords, and scales is probably to “gamify” the process: instead of using them as an interface, use them to control a virtual onscreen avatar, for example in a platforming game, not unlike many typing tutor or mathematics computer games. Though navigating complex menus by playing notes could work quite well for trained guitarists, another issue that arises is that such an interface doesn’t truly leverage the Kinect’s capabilities, since it could mostly be controlled with a simple microphone interface. The gesture interface almost feels tacked on, as though all interactions should be done by playing the guitar. Perhaps the Kinect could be useful in such a context as a clutch, but it would never be the main means of interacting with the software in such an application.

Thus, it is not clear that the Kinect is the ideal interface for music learning or practicing applications. To begin with, it probably can’t teach you how to hold your instrument and move your fingers properly, since the SDK doesn’t provide data on such small joints. It could also be argued that using Kinect gestures to operate such an interface would actually detract from the learning or practice application, since any gesture that would rely on the Kinect’s unique detection capabilities would require the user to gesture in a manner not inherent or necessarily applicable to playing the instrument they are learning to play, for example with their legs or by removing at least one hand from their instrument.

Some of the ideas for a learning or practice application utilizing a Kinect interface that we brainstormed, sketched, and prototyped show promise. The tuner could be useful if implemented with a natural user interface, but it is difficult to justify a standalone Kinect tuning application. If anything, the tuner should be a feature of some more full-featured signal processing or mixing application, which could be useful in other contexts like recording and performing.

Prototype Sketches

User Testing Photos

Conclusion

Through a series of feasibility tests, we determined that the Kinect could reliably be used to detect many gestures a guitar player might use to control a gestural interface. A drummer, however, posed problems for the sensor since most of the body is obscured by the drum set. As a result, we decided to focus on potential use-cases for a guitarist, and prototyped various applications within the following three areas: performance, recording, and practice.

The virtual stomp box proved to be a promising idea for a guitarist performing on stage, since it would allow the performer to move around freely and still provide the flexibility of changing effects mid-performance. However, the lack of visual feedback could be an important limitation for this idea. The gestural interface explored for recording audio also yielded positive feedback. This type of application could potentially provide a lot of utility for a musician, since recording audio tends to be an interactive process where many takes and frequent editing may be needed. Finally, it was determined that a Kinect-based interface would not be ideal for learning applications. A tuning feature could however be very useful as a component in some other music-based application.

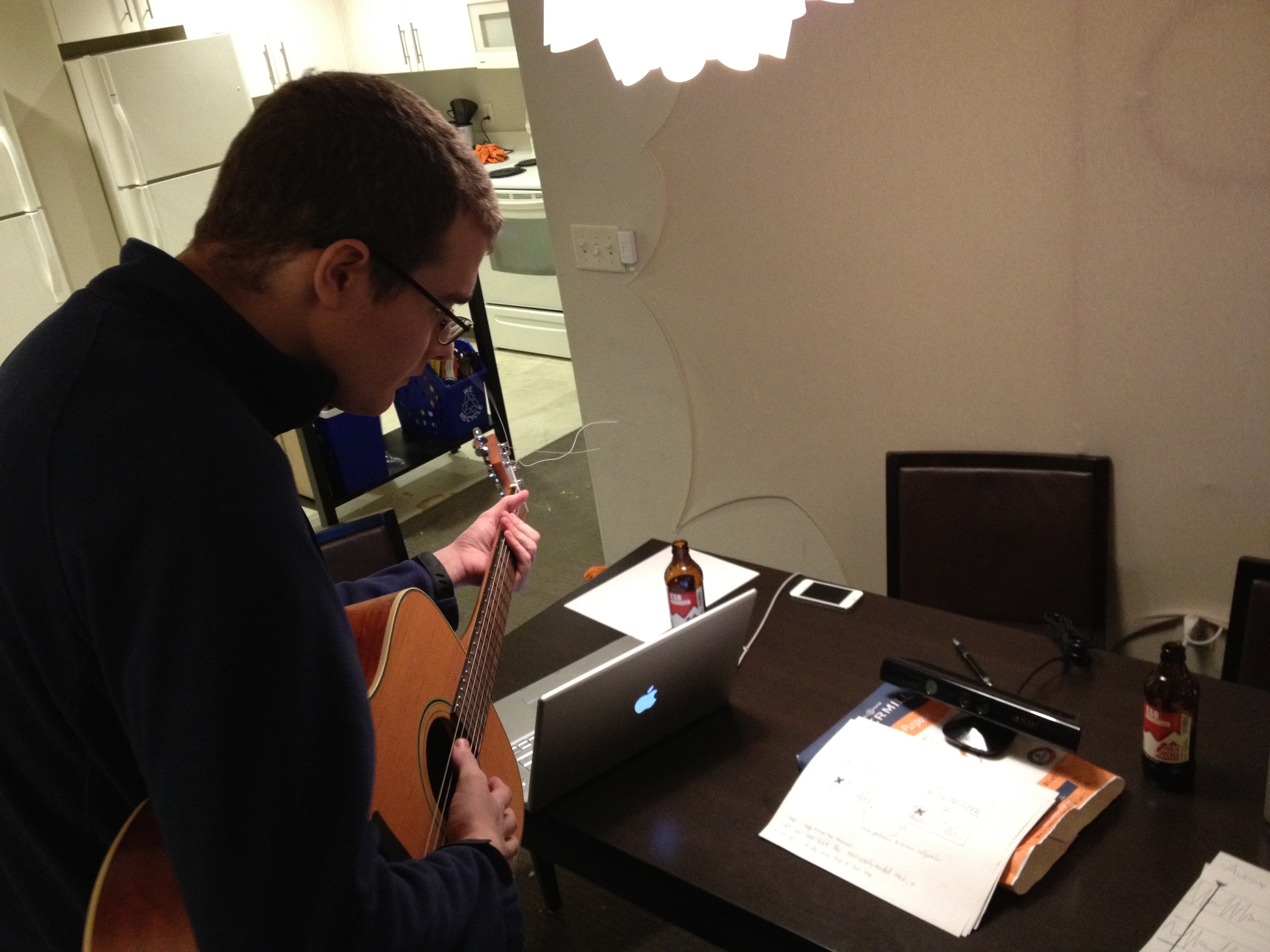

III. Wizard-of-Oz Testing

For our Wizard-of-Oz testing, the two tasks we focused on were learning and audio recording, specifically as they apply to guitar players. We chose these two tasks because we felt they were the most promising in terms of feasibility, the potential to address user needs, and their suitability to gestural-based interface control. The first task, learning, was based on a song-book interface that allowed users to learn how to play songs by scrolling through a list of available tracks and selecting their desired track by strumming a corresponding chord. The second task, recording, enabled users to create simple audio recordings, set tempo, save and export files, and make basic edits on clips.

Navigation of the song-book interface was fairly straightforward when it came to scrolling and moving between pages. Scrolling required users to slide their left hand down the neck of the guitar as a clutch and then scroll up and down using their right hand. After they had found the song they wanted, they could select it by playing the corresponding chord that was displayed next to the song title. This seemed like a reasonable way to select songs at first, but our wizard-of-oz testing revealed that requiring users to strum a specific chord in order to select a song (such as A minor to start learning a song by Nirvana) made it difficult for beginners to navigate our interface because they had no prior knowledge of guitar chords. Luckily, users could gesture for help by raising their left hand above their heads, which brought up a diagram that showed them how to play the specific chord they needed. In this way, our application helped users learn new chords even as they selected songs. However, as testers also pointed out, we would have to achieve a balance between teaching users new chords and allowing them to select the songs they wanted without getting bogged down too much.

It was difficult to record reliable Kinect data because there were so many people in the room during lab, but we managed to record a few realistic performance scenarios in which guitarists navigated through our song-book, selected a song, asked for help by raising their left hand, and adjusted the playback tempo in order to make it easier to play along (by moving both hands up or down). We did this using an app skeleton that we built for our project that allowed us to record the various input performances for later playback. Even though our testers were working from paper prototypes, our launch screen/diagram explained all of the necessary gestures in such a way that our users were able to execute them fairly easily each time.

One of the big things we learned during our initial wizard-of-oz tests for the learning task was the fact that the Kinect often mistakes the neck of the guitar for the musician's arm. This could certainly cause problems, especially with gestures that involve moving one's arm in close proximity of the guitar neck. With that in mind, we determined that the strumming hand is far more effective when it comes to gesturing because it rarely gets occluded by the guitar.

In addition, one tester pointed out that on-screen diagrams should match the orientation of the guitar player to avoid confusion. For example, if someone is playing guitar right-handed, any diagrams or images featuring a guitar player should be oriented the same way on screen. Our second task, recording, also allowed us to capture a few realistic usage scenarios in which users were prompted to start recordings, edit tracks they had laid down, and delete or keep recordings they had just completed. We were again working from paper prototypes, but we still managed to record some input performances that will hopefully be useful in future iterations of our prototype.

One thing that we learned about recording and playback is that controlling playheads and cropping clips is a fairly intuitive process in a gestural-based interface. For example, to adjust the playback/recording head, we hardly had to instruct users to grab it with their hand and move it back and forth in order to adjust its positioning. For the most part, they did this instinctively. Editing tracks was very similar, as users simply had to grab each end of the clip and move their hands back and forth in order to trim it down.

Another thing that we learned about recording during our wizard-of-oz testing is that holding a guitar makes it fairly difficult to gesture with two hands (because it can get in the way and/or get tracked by the Kinect as we found out during our first tests). This requires most two-handed gestures to be done predominantly above the shoulder line in order to ensure the user's arms are being tracked properly, which can cause fatigue if done for a long period of time. In future iterations of our prototype, we will have to keep these limitations in mind, and revise any gestures accordingly.

We learned quite a bit about each interface idea during our wizard-of-oz testing, but from the results we recorded and observed, we feel like the recording task has more potential in terms of a gestural-based music app. Although the song book would likely make for an interesting application, we feel that an audio recording app could more effectively leverage the Kinect's gesture sensing capabilities while allowing users to intuitively start/stop, edit, and save recordings. Many of the questions raised during our wizard-of-oz testing will likely drive future iterations of our prototype, and we look forward to exploring them in milestone four.

IV. Functional Prototype I

Feature Choices, Rationale, & Implementation Progress

For the first functional prototype of our guitarist-friendly music recording interface, we chose to implement the following features:

- Voice-activated audio recording interface

  - Say "record" to start recording (while on-stage)

  - Step off stage to stop recording

  - "Play" to cue playback

  - "Stop" to stop playback

- Gesture-based audio recording interface

  - Swipe right to record

  - Swipe left to stop recording

  - Swipe left to start playback

  - Swipe left while playing to stop playback

- Stage indicator and clutch

- Waveform display

- Microphone input level display

- Microphone input level adjustment

- Elapsed time display

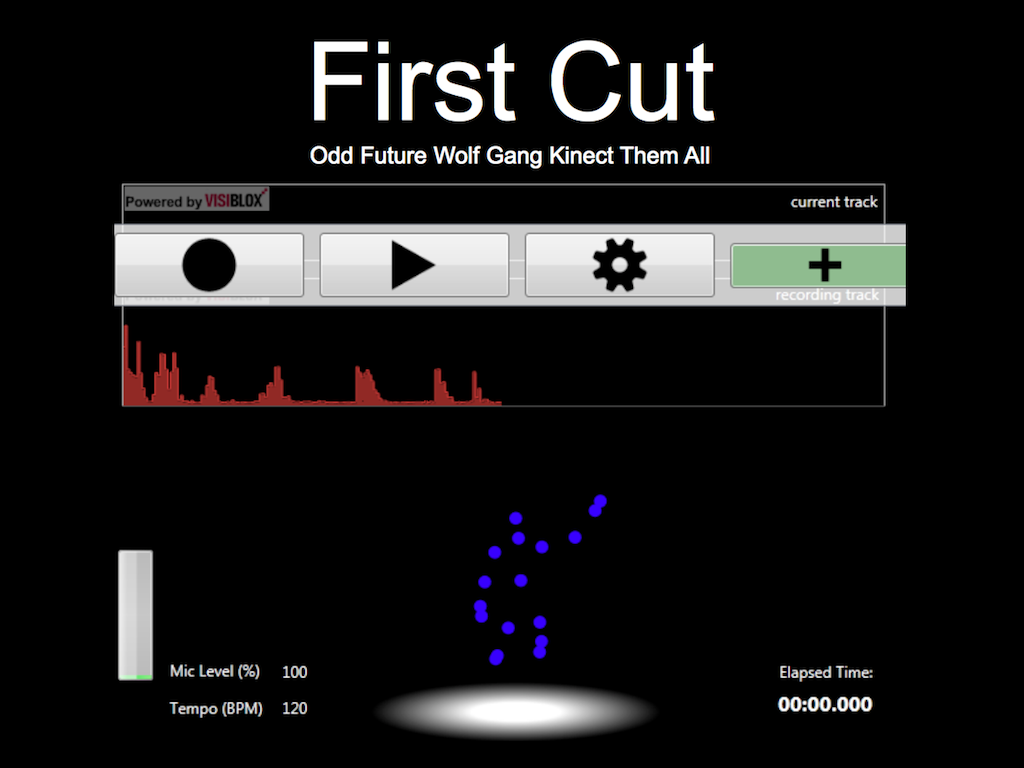

While our main focus in terms of features was getting our recording interface up and running and allowing users to start recording, stop recording, and play back recordings, we also implemented two different controllers for our application. The first controller utilizes a combination of voice commands and a clutch (based on the position of the user's head) in order to determine if he/she is "on-stage" or not. As the user moves around in front of the Kinect, the position of their head is tracked and displayed on screen. If a user is "on-stage" (i.e., their head is centered relative to the Kinect), the indicator at the top of the screen turns from red to blue, notifying them that they can now start recording by saying "record." Recording can then be stopped by stepping off-stage. The rationale behind this design decision was to eliminate false positives by ensuring that voice commands are only detected when someone actually intends to use our app. As far as the choice to track the user's head goes, we determined that head position is a good indicator of body position and a guitarist's head is also hardly ever occluded (as opposed to their waistline), so the Kinect can reliably pick it up.
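The interaction between the stage clutch and the voice commands can be sketched as a small state holder. This Python sketch is illustrative only and is not our prototype's code: the class name, the `stage_half_width` value, the meaning of `head_x` (horizontal offset of the head from the sensor's center line), and the choice to gate every voice command on being on-stage are assumptions.

```python
from dataclasses import dataclass


@dataclass
class VoiceController:
    """Sketch of the voice controller: commands count only while on stage."""
    stage_half_width: float = 0.3  # assumed: meters either side of center
    recording: bool = False
    playing: bool = False

    def on_stage(self, head_x: float) -> bool:
        # The head is "on-stage" when it is roughly centered on the sensor.
        return abs(head_x) <= self.stage_half_width

    def indicator_color(self, head_x: float) -> str:
        # Blue means voice commands will be accepted, red means they won't.
        return "blue" if self.on_stage(head_x) else "red"

    def update_head(self, head_x: float) -> None:
        # Stepping off stage stops an active recording.
        if self.recording and not self.on_stage(head_x):
            self.recording = False

    def on_voice(self, word: str, head_x: float) -> None:
        if not self.on_stage(head_x):
            return  # ignore speech from off stage to avoid false positives
        if word == "record" and not self.recording:
            self.playing = False
            self.recording = True
        elif word == "play" and not self.recording:
            self.playing = True
        elif word == "stop":
            self.playing = False  # "stop" only affects playback
```

In this sketch, saying "stop" during a recording does nothing, since recording ends only when the user steps off-stage.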

Our second controller is entirely gesture-based and relies on swiping motions in order to start/stop playback and recording. The rationale behind this came mainly from our wizard-of-oz testing where we discovered that one-handed gestures are natural for guitarists to perform using their strumming hand. We also feel that a swiping gesture is really easy to remember, isn't too fatiguing, and can be reliably detected using the Kinect.
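The swipe mappings listed earlier amount to a small state machine, sketched below in Python. The swipe detector is a deliberately naive stand-in (net horizontal travel of the hand over a short window); `min_travel`, the direction of the x axis, and the decision to ignore unlisted state/swipe combinations are assumptions, not a description of our implementation.

```python
from typing import Optional, Sequence

# (state, swipe) -> next state, following the gesture list for this controller.
TRANSITIONS = {
    ("idle", "right"): "recording",
    ("recording", "left"): "idle",
    ("idle", "left"): "playing",
    ("playing", "left"): "idle",
}


def detect_swipe(hand_xs: Sequence[float],
                 min_travel: float = 0.4) -> Optional[str]:
    """Classify a short window of hand x positions as a swipe, if any.

    `min_travel` (meters) is an assumed threshold, and x is assumed to
    grow toward the user's right.
    """
    if len(hand_xs) < 2:
        return None
    travel = hand_xs[-1] - hand_xs[0]
    if travel >= min_travel:
        return "right"
    if travel <= -min_travel:
        return "left"
    return None


def next_state(state: str, swipe: Optional[str]) -> str:
    """Apply a swipe; combinations not listed leave the state unchanged."""
    return TRANSITIONS.get((state, swipe), state)
```

Writing the mapping as a table makes one property of the design easy to see: a left swipe means something different in each of the three states.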

Another feature that we chose to include was a waveform display that traces out the actual wave that is being recorded by our app. This gives users visual feedback and confirms that they have in fact started or stopped a recording. We are also thinking about including a playhead marker that indicates playback position so users know exactly where they are in a track.

Our microphone level adjustment allows users to control the input volume of the microphone using a left-to-right slider, which can be confirmed by looking at the microphone input level display. The rationale behind the slider was to give users greater control over their audio recordings and allow them to eliminate clipping (or distortion from the input level being too high). This is especially important when recording instruments because clipping can make even the best guitarist sound awful.
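To make the relationship between input level and clipping concrete, here is a minimal Python sketch. It assumes samples are floats normalized to the range -1.0 to 1.0 and that the slider sets a simple linear `gain`; both function names are hypothetical and the code is not taken from our audio pipeline.

```python
from typing import List, Sequence


def apply_gain(samples: Sequence[float], gain: float) -> List[float]:
    """Scale samples by `gain`, hard-limiting to the [-1.0, 1.0] range."""
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


def clipped_fraction(samples: Sequence[float], gain: float) -> float:
    """Fraction of samples that would exceed full scale at this gain."""
    if not samples:
        return 0.0
    over = sum(1 for s in samples if abs(s * gain) > 1.0)
    return over / len(samples)
```

A level display could warn the user whenever `clipped_fraction` is above zero, prompting them to move the slider down before the next take.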

The elapsed time display is similar to the waveform display in that it offers users visual feedback and confirms that audio is being recorded. It also lets users know how long they've been recording for and could be used in the future to make fine adjustments to the playhead position.

Remaining Implementation Ideas/Design Issues (from high priority to low priority)

- Reorganize audio recorder to better support multiple tracks

- Better understand Sample Aggregator implementation to represent multiple waveforms

- Simultaneous playback and audio recording

- Being able to scroll through wider waveforms rather than having them cut off at the end of the screen

- Navigating to a specific point within a track

- Exporting audio into a convenient format

- Microphone selection

- Add effects (distortion? flange? echo?)

Sources Cited

V. User Testing

Introduction

Our group’s motivation is to better understand the feasibility of voice and gesture-based controllers for an audio recording interface. Most importantly, we want to continue developing an application that allows musicians of all abilities (and specifically guitarists of all skill levels) to quickly and seamlessly record themselves with minimal interruption and frustration. In terms of goals, our primary aim is to find out if users prefer one controller to the other and if they believe there is a blend of the two that could maximize efficiency and ease of use. Another goal is to discover which controller generates more false positives and attempt to reduce or eliminate them altogether in the second iteration of our prototype.

Some driving questions that we’re aiming to answer during our user testing sessions include: Do we get more false positives when utilizing gesture-based control or a voice-activated interface? Are our current controllers well-suited to the task of audio recording? Are there any immediate features that come to mind when working with a gestural/voice controlled recording interface? Is either of these controllers an actual improvement on existing alternatives? Which controller feels more natural? Which is easier to use? Is there anything in our application that could be made clearer or more understandable? Are there any features that require more than a quick explanation to grasp?

From feedback we’ve received during informal studio sessions and thoughts we’ve generated during time spent with our prototype, we’ve developed the following hypotheses about our forthcoming user tests. For one, we hypothesize that users will find the voice-controlled interface more intuitive (because saying “record” makes more sense to cue recording than swiping your hand to the side). We also hypothesize that users will find the staging area and the gestural-based controller the most prone to needing explanation (because there isn’t a direct mapping between the colored bar at the top of our app and the need to stand in the middle of it to activate recording, etc.). Lastly, and most importantly, we hypothesize that users (and most notably, guitarists) will prefer our controllers (or a combination of them) to current methods for cuing recording and playback.

Methods

In terms of study design, we plan on running a within-subjects A/B test using both controllers for our application (gestural & voice) and will alternate users between the two controller types (A/B then B/A, etc.). Users will be given instructions on how our application works (explanation of staging area, etc.) and how to gesture/dictate voice commands in order to cue recording, stop recording, and start playback. Currently users can only record single tracks and each new track will overwrite the previous one, so we’ll also explain this to users.
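The alternating order can be written down in a few lines. In this sketch the choice of which controller the first participant sees first is an assumption, as is the function name.

```python
from typing import List, Tuple


def controller_orders(num_users: int) -> List[Tuple[str, str]]:
    """Alternate controller order across participants (A/B, B/A, A/B, ...)."""
    orders = [("gesture", "voice"), ("voice", "gesture")]
    return [orders[i % 2] for i in range(num_users)]
```

Alternating the order this way keeps learning effects from consistently favoring whichever controller is tried second, although with an odd number of participants one order will appear once more than the other.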

After users understand the basic workings of our app, we will instruct them to record a track, stop their recording, and cue playback. During this time we will be observing the number of false positives that occur and the number of misses they commit (i.e., trying to do something and failing). On the qualitative side, we intend to survey users about their experiences and which controller they preferred. We will also ask them if there are any immediate features that they think should be included in our app. We plan on recruiting a minimum of 6 users (likely more), with the goal of targeting guitarists and/or musicians for our tests. Because our application isn’t yet tailored exclusively toward guitarists, we feel it’s important to get feedback from a range of users in order to maximize the variety of opinions we’re receiving. As such, we intend to recruit both amateurs and professionals, and both users who are familiar with the Kinect and users who are new to it. As far as how we’re actually going to recruit, we’ll likely rely on friends and dormmates who are musically inclined. Because the requirement is only 6 users, it shouldn’t be a problem to find enough people to test with (and as we mentioned, we’ll most likely recruit more than 6 participants to generate varied, interesting feedback).

Results

We tested our application with seven different users and collected both quantitative and qualitative data during our various user testing rounds. On the quantitative side, we documented the number of mistakes users encountered while using each controller and made note of the task they were trying to accomplish when encountering those mistakes. This enabled us to get a statistical measure of the accuracy of each controller and gain a better understanding of the places in our app where users commonly got hung up. The breakdown of mistakes amongst users looks like this:

| | Daniel Tublin | Scott Sakaida | Ian Montgomery | Nisha Garimalla | Erik Shoji | Dave Dolben | Ryan Brown | TOTAL |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Voice Mistakes | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 4 |
| Gesture Mistakes | 1 | 2 | 2 | 0 | 2 | 5 | 1 | 13 |

In other words, users encountered far fewer miscues on average when working with the voice interface than when working with the gesture-based controller (0.57 per user compared to 1.86 per user, respectively).
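For reference, the per-user averages follow directly from the counts in the table; the short Python check below reproduces them (the variable names are ours, chosen for illustration).

```python
# Mistake counts per participant, copied from the table above.
voice_mistakes = [1, 0, 1, 1, 0, 0, 1]
gesture_mistakes = [1, 2, 2, 0, 2, 5, 1]


def mean(counts):
    """Arithmetic mean of a non-empty list of counts."""
    return sum(counts) / len(counts)


voice_avg = mean(voice_mistakes)      # 4 mistakes over 7 users
gesture_avg = mean(gesture_mistakes)  # 13 mistakes over 7 users
print(f"voice: {voice_avg:.2f}, gesture: {gesture_avg:.2f}")
# prints "voice: 0.57, gesture: 1.86"
```

With only seven participants these averages are descriptive rather than statistically conclusive; a single user (five gesture mistakes) accounts for a large share of the gesture total.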

In terms of when these mistakes were actually encountered, on the voice command side, two users said “playback” instead of “play” to try and cue playback, one user accidentally said “stop” instead of moving off-stage in order to stop their recording, and the last user had to say “record” an extra time to start their recording. Looking at these results, it’s interesting to note that three out of the four errors were committed because users didn’t remember the directions they were given, whereas only one error actually involved a problem with the app itself.

On the gesture controller side, users most often encountered errors when the Kinect did not properly sense their gestures or when they swiped in the wrong direction for what they were trying to accomplish. For example, Erik and Dave cued new recordings accidentally. In Erik’s case, he had forgotten which way he had to swipe his hand to cue playback, so he swiped to the right instead of the left, which started a new recording. In Dave’s case, the Kinect was likely confusing part of the guitar for his arm, because whenever he swiped his right hand far enough to the left to try and play back his previous recording, he accidentally cued a new recording instead (which should only happen during a right swipe gesture).

Far more qualitative data was collected during our user tests, which mainly took the form of observations and a series of questions that we asked users after they had successfully recorded and played back a track using both controllers. Although some of the questions changed to accommodate the feedback we were receiving, we were most interested in finding out which controller users preferred, which features were the most confusing and/or necessitated the most explanation, and how it felt to use our application with an instrument (if that specific user was in fact testing with one). The results, broken down by user and partially condensed, are as follows:

Daniel Tublin (non-musician, first time Kinect user - gesture then voice)

--Which controller did you prefer and why?

I preferred the voice interface. I felt it was easier to use than the gestural one because it made more sense to say “record” to start recording than swiping your hand to the right. There’s nothing distinct about moving your hand to the right to record or to the left to play something back.

--Which feature(s) were the most confusing/necessitated the most explanation?

Having playback and stop recording as the same gesture was confusing and the gesture-command pairings were difficult to remember after hearing them only once. That’s why I asked a few times for confirmation about the gestures. Also, if you hadn’t explained the staging area I definitely would’ve been confused about it.

--Are there any other gestures that come to mind that could be more helpful or useful for controlling our app?

A criss-crossing arms gesture might be a good addition (especially to stop recording) because it would allow you to get away from using one gesture to do two things.

Scott Sakaida (musician, first time Kinect user - voice then gesture)

--Which controller did you prefer and why?

I preferred the gesture controller because it’s an audio recording app and in the voice recording mode, the commands get in the way of the recording.

--Which features were the most confusing?

Probably just the second swipe left to cue playback because it’s the same as the stop recording gesture. The staging area also would’ve been confusing if you hadn’t talked about it, but once I understood what it was, it made sense.

--Which controller felt more natural and why?

The swipe one because you don’t have to move out of place to do it. It’s easy to stop and play by just moving your hand because they’re direct opposites. However, I did find it a little confusing that you guys combined the motion command of moving off stage with the voice commands. Why not just say “stop” and trim the last second of the recording?

--How was using the Kinect with an ukulele?

I liked it, but I would’ve liked to be closer to the computer at times. Also, if there wasn’t any interference from potentially saying “record” on accident, I might prefer the voice commands when playing an instrument because I can keep both hands on my uke.

--Any other feedback?

I feel like vocal interference can occur more often than gesture interference, which is something you should think about. You might include a countdown feature after you say “record” to eliminate that word from the actual recording. It would also be cool to give users the option to select between the two controller modes or allow them to record their own gestures and assign them to each function.

Ian Montgomery (musician, first time Kinect user - gesture then voice)

--Which controller was easier to use and which did you prefer? Why?

The voice one was easier to use because it worked the first time and taking your hands off the guitar while playing is inconvenient. I preferred the voice controller because it seemed more intuitive, although having to move off-stage to stop the recording in voice mode wasn’t exactly straightforward (although it worked so well I didn’t mind it).

--Did you find any features particularly confusing or in need of additional explanation?

Remembering which gestures go with what command (swipe right to start recording for example) was confusing at first. That’s why you had to remind me about the gestures so many times.

--How was using the app while holding an instrument?

I liked it, but having to take your hands off of the guitar to gesture was inconvenient.

--Are there any other gestures that come to mind that could be more helpful or useful for controlling our app?

Using your feet to control recording or playback would be a good addition because it would allow you to keep your hands on the guitar.

Nisha Garimalla (non-musician, experienced Kinect user - voice then gesture)

--Which controller did you prefer and why?

I liked the gesture controller better. It was more responsive than the voice interface. I feel that voice has a lot of variance whereas gestures are more easily tracked.

--Did you find any features particularly confusing or in need of additional explanation?

The gesture controller was a bit confusing because you used the same gesture twice to control stopping and playback, but it wasn’t too difficult to remember and makes sense given your target audience. Also, why did you choose to have the user walk off stage to stop recording instead of just saying “stop”? That doesn’t really make sense given the fact that the other two commands are voice-based. Maybe you could have the user take a step forward or do something less demanding than having to move entirely off-stage. Also, when I was trying to record for the first time, there wasn’t any confirmation that I was recording until I saw the waveform. It might be nice to include a countdown that confirms that you’re actually recording.

--Any other feedback?

You should combine the gesture and voice controllers. If you say a keyword, then the menu of voice commands appears. If not, then the app is entirely gesture based. You should also think of a way to make the staging area more clear or refine your clutch so it’s not as confusing to users.

Erik Shoji (non-musician, semi-experienced Kinect user - gesture then voice)

--Which controller was easier to use and why? Which did you prefer?

Voice was easier to use because it was easier to remember to say “record” and “play” than the direction I had to wave my hand in to control the app. I preferred voice because it involved less motions and the commands were more memorable.

--Did you find any features particularly confusing or in need of additional explanation?

It was tough to remember which direction to gesture in to cue playback because all of the gestures were swiping gestures and you reused the swipe left gesture twice.

Dave Dolben (musician, first time Kinect user - voice then gesture)

--In general, which controller do you prefer and why?

The voice interface because it works better right now. If the gesture interface worked as well, it would be nice because you wouldn’t get those additional words in your recordings.

--Which controller felt more natural?

The voice one because it makes sense to say “record” to start recording. If the gestures were very simple (like pointing at the Kinect or something), it might feel more natural than using voice, but right now, the voice interface makes more sense.

--How was using the app while holding an instrument? Were the gestures fatiguing or awkward to do with a guitar in your hands?

For the voice one, it didn’t make any difference, but for the gesture-based interface, the guitar was obviously interfering with the Kinect, so it made it really tough to use. As far as the actual gestures go, the guitar didn’t interfere with any of them which was nice. You could just gesture and then place your hands on the guitar.

--Did you find any features particularly confusing or in need of additional explanation?

It would be nice to have some text to explain what the indicator at the top of the screen does. You also might want to include a simple diagram to instruct users on how to perform the gestures properly.

Ryan Brown (musician, first time Kinect user - gesture then voice)

--In general, which controller do you prefer and why?

I prefer the gesture one because it seems high tech and more effective. I also didn’t have to move my head all the way to either side of the screen to stop recording. I also prefer the gesture one because the keywords “play” or “stop” might end up being in a song, which could cause all kinds of problems.

--Do you find any features particularly confusing or in need of additional explanation?

It was a little confusing having to record a new track to get rid of the old one. Does my old recording get deleted or is it cached somewhere?

--How was using the app while holding an instrument? Were the gestures fatiguing or awkward at all with a guitar in your hands?

It was really easy to use and the gestures were easy enough with a guitar in your hands that I don’t think they’d get fatiguing.

--Are there any other gestures that come to mind that could be more helpful or useful for controlling our app?

It would be nice if you added another gesture to save a recording or one to delete an old recording.

Discussion

User preference was split almost evenly between the voice-controlled interface and the gesture-based controller (four users preferred the voice controller and three preferred the gestural one). Those who preferred the voice interface felt it made more sense to say “record” to start a recording and “play” to play it back than to swipe arbitrarily through the air to control recording and playback. Those who preferred the voice controller (and even some users who preferred the gesture controller) also noted that they were confused by the use of the same gesture to issue two different commands. From this, we now know that a single gesture should be paired with a single task, and that gestures should be as distinct as possible so users have an easier time recalling them and telling them apart. We also know that if we’re going to create an app that relies on voice commands, we should stick almost entirely to voice control. Although moving off-stage is an effective way to stop a recording, a number of users found it confusing and difficult to do (especially when seated while playing the guitar).

Another important point is the level of explanation required to understand some of the features of our application. Without having the stage explained to them, most of the users (and likely all of them) wouldn’t have understood what the indicator at the top of the screen was. Additionally, we had to explain how to use our app to all of our users (for example, which voice commands to give and which gestures to use) and even after that, first-time users had difficulty remembering the various commands (especially when using the gesture controller). As such, it’s important that we include documentation about how to use our app or create a diagram that appears within the app explaining how to use it properly.

In terms of shortcomings, it was difficult to find seven guitarists who were available to test our application, so we relied on a few non-musicians to test it as well. This made it difficult to reliably predict how well our gesture-based controller would fare with a guitarist using it, but four of our seven users did test our app with instruments in their hands (three guitars and one ukulele). In addition, although we were not able to test our app with a guitar player each time, all of our non-musician users provided insightful feedback that we’ll certainly consider in the next iteration of our prototype.

Implications

One of the big concerns with the voice-based interface was that the keyword “record” might end up in the recorded audio, or might be said by accident during a recording and trigger an unintended command. This is certainly an issue that we need to address, and we hope to combine some aspects of the gesture and voice-based controllers in order to mitigate this problem. One suggestion that makes sense is to have a specific (and infrequently spoken) clutch word that brings up a list of available voice commands or sends our app into “listen” mode where users can then issue voice commands. We might also consider implementing a countdown that gets triggered after “record” is recognized so that word won’t appear in the recording. This would also let users know that their voice command has in fact been recognized and that they should prepare to record.
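The clutch-word and countdown ideas above can be sketched as a small state machine. This is only an illustration in Python, not the application's code: the class name `VoiceController`, the clutch word "kinect", the three-tick countdown, and the rule that an unrecognized word drops back to idle are all assumptions made for the example.

```python
from enum import Enum, auto


class Mode(Enum):
    IDLE = auto()       # only the clutch word is acted on
    LISTENING = auto()  # clutch word heard; the next word is treated as a command
    COUNTDOWN = auto()  # "record" accepted; counting down before capture starts
    RECORDING = auto()  # audio is being captured; spoken words are ignored


class VoiceController:
    """Hypothetical controller; all names and defaults are illustrative."""

    def __init__(self, clutch_word="kinect", countdown_ticks=3):
        self.clutch_word = clutch_word
        self.countdown_ticks = countdown_ticks
        self.mode = Mode.IDLE
        self.remaining = 0

    def hear(self, word):
        """Feed one recognized word into the controller."""
        word = word.lower()
        if self.mode is Mode.IDLE:
            if word == self.clutch_word:
                self.mode = Mode.LISTENING
        elif self.mode is Mode.LISTENING:
            if word == "record":
                self.mode = Mode.COUNTDOWN
                self.remaining = self.countdown_ticks
            else:
                # "play" would start playback here; anything else is dropped.
                self.mode = Mode.IDLE
        # In COUNTDOWN and RECORDING every word is ignored, so a lyric
        # containing "record" or "play" cannot trigger a command.

    def tick(self):
        """Call once per countdown step (for example, once per second)."""
        if self.mode is Mode.COUNTDOWN:
            self.remaining -= 1
            if self.remaining == 0:
                self.mode = Mode.RECORDING

    def stop(self):
        """Stop recording; assumed to be driven by a non-voice input."""
        if self.mode is Mode.RECORDING:
            self.mode = Mode.IDLE
```

Because capture only begins when the countdown reaches zero, the spoken word “record” falls outside the recording, and the countdown itself doubles as confirmation that the command was recognized.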

As far as the gesture controller goes, we will have to refine our gestures and make sure that we eliminate false positives, especially when users are trying to gesture with a guitar in their hands. One thought is implementing foot-controlled gestures. A guitarist’s feet are less likely to be confused for the guitar than their arms, so it makes some sense to go that route. We might also consider a gesture that has a user stick their arm out to the side instead of upward in order to get it as far away from the guitar as possible.

The idea of saving tracks is also intriguing and we will hopefully pursue this in the next iteration of our prototype. Right now, all of the tracks get saved to a user’s desktop, which can be quite surprising the first time around, especially if you record a number of tracks and then close the application. Deleting tracks is also a possibility, although not entirely necessary because only one user mentioned it and it doesn’t seem to be the most pressing issue right now.

VI. Functional Prototype II

After getting a lot of feedback from user testing, we slightly re-prioritized the list of things that we were hoping to tackle after Functional Prototype I in order to make our application maximally useful to our target audience. In addition to improving our multi-track support and adding other functionality which we had already been planning on adding, we also made a number of user experience improvements in order to make our app more usable and accessible.

UI Improvements

We made a number of improvements to the UI since the previous prototype, thanks in large part to the wide range of feedback we were able to solicit from User Testing last week. One thing that we had not initially prioritized, but was quickly unearthed during testing, was providing better feedback in error states. For example, we found that it was quite confusing and frustrating for users to determine whether or not they were being sensed by the Kinect properly. In response, we added visual feedback, including a "stage" that helps users identify where the Kinect's range is, and whether they are inside it. More specifically, the changes that we made to the UI include the following:

- Storyboard-enriched menus - we added both top- and right-side menus that make the process of using gestures for selection more intuitive. Part of the reasoning for this was the fairly even split we observed in user testing between preference for the speech and gestural interfaces. While we assumed the speech interface would be the overwhelming favorite, we learned that it was still important to provide a good gestural experience (especially given that neither interface is 100% accurate).

- Recognizers implemented for menu systems - as part of revamping our application's menus, we implemented gesture recognizers that could recognize gestures to select from menus (without annoying interaction with other menus and UI elements on the screen). We improved our initial naïve implementation of these recognizers with the Kinect Toolbox to ensure that we wait for the user skeleton to be stable before allowing him or her to select anything, which was very helpful in reducing accidental menu selections.

- New recorded waveform display - in order to both improve our UI and to rid ourselves of a nasty dependency, we moved to a new means of representing recorded waveforms in our application. The one downside is that the library that we are currently using adds a watermark (in order to upsell to a more expensive, commercial version). We recently contacted the vendor in hopes of getting rid of the watermark; otherwise, we may be forced to roll our own solution.

- "Stage" and user skeleton display - as we mentioned above, we added a "Stage" to the UI to help users identify when the Kinect was having trouble identifying and recognizing them.
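The idea of waiting for a stable skeleton before accepting a menu selection, mentioned in the list above, can be illustrated with a short Python sketch. It does not reproduce the Kinect Toolbox API; the class name `StabilityGate`, the 10-frame window, and the 5 cm threshold are assumptions chosen for the example. The gate keeps the most recent positions of one tracked joint and reports the user as stable only when every sample lies close to their centroid.

```python
from collections import deque
import math


class StabilityGate:
    """Hypothetical helper: reports whether a tracked joint is holding still."""

    def __init__(self, window=10, threshold=0.05):
        self.window = window        # number of recent frames to inspect
        self.threshold = threshold  # allowed drift from the centroid (assumed metres)
        self.positions = deque(maxlen=window)

    def add(self, position):
        """Record the joint's (x, y, z) position for the latest frame."""
        self.positions.append(tuple(position))

    def is_stable(self):
        """True only once the window is full and no sample drifts too far."""
        if len(self.positions) < self.window:
            return False
        n = len(self.positions)
        centroid = tuple(sum(p[i] for p in self.positions) / n for i in range(3))
        return all(math.dist(p, centroid) <= self.threshold for p in self.positions)
```

A menu recognizer could then skip any selection gesture while `is_stable()` returns `False`, which is one way to reduce accidental selections as a user walks into frame.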

Functionality Improvements

As we noted in our writeup for Functional Prototype I, there were a few very important features that we needed to address:

- Multitrack support - One of the core missing features from our Functional Prototype I was the ability to easily record to and interact with multiple tracks. In our current version, a user can keep recording over the same track as many times as he or she likes until it's just right, then commit it to the "background track" in order to allow for future recording. While the UI shows all of the previously-recorded tracks being merged into one track, in reality the implementation keeps all of the tracks separate in order to maximize flexibility in post-production.

- Sample aggregator dependence - we largely eliminated our dependence on SampleAggregator, which was made even more crucial when we switched the way we represented recorded waveforms in the application.

- Metronome - while limited in its current implementation, we added a stub (with a user interface to follow) that lets the user set the BPM for the song and play along with a metronome. A fortunate side effect of this work was that we learned how to marshal events onto the UI thread, so that events raised on other threads can still update the UI.
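The multitrack behaviour described in the first item of the list above (re-record a take until it is right, commit it, and show one merged background track while keeping the takes separate) might look roughly like the following Python sketch. The `Session` class and its method names are hypothetical, and samples are assumed to be floats in the range -1.0 to 1.0.

```python
class Session:
    """Hypothetical multitrack session; names are illustrative only."""

    def __init__(self):
        self.committed = []  # every committed take is stored separately
        self.take = None     # the take currently being worked on

    def record(self, samples):
        """Record a take, replacing any uncommitted take."""
        self.take = list(samples)

    def commit(self):
        """Move the current take into the set of committed tracks."""
        if self.take is not None:
            self.committed.append(self.take)
            self.take = None

    def background(self):
        """Mix committed takes into one track for display or playback."""
        length = max((len(t) for t in self.committed), default=0)
        mix = [0.0] * length
        for track in self.committed:
            for i, sample in enumerate(track):
                mix[i] += sample
        # Clip to the assumed valid sample range.
        return [max(-1.0, min(1.0, s)) for s in mix]
```

Because `background()` computes the mix on demand and never modifies `committed`, the individual takes remain available for post-production even though the user sees a single merged track.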

VII. Final Presentation Materials

Video

Slide

Poster

Source Code

git@github.com:nhippenmeyer/CS247.git